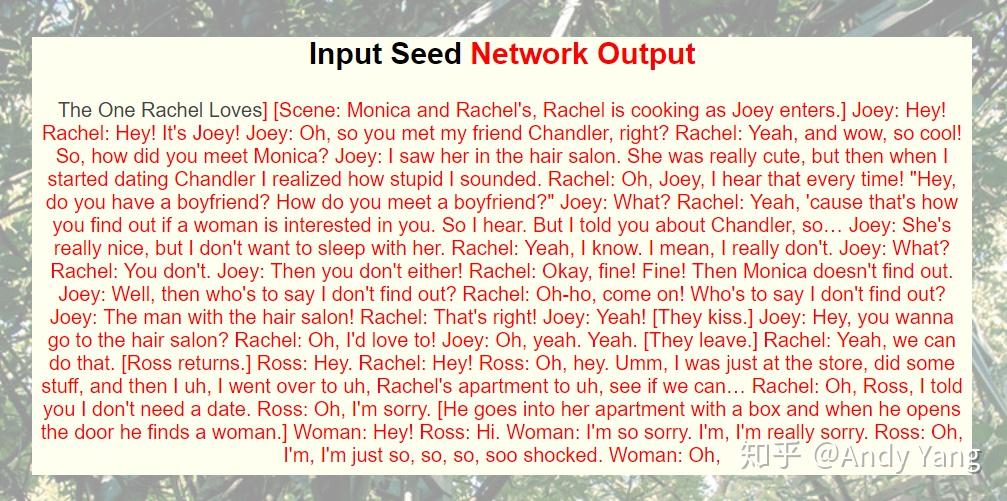

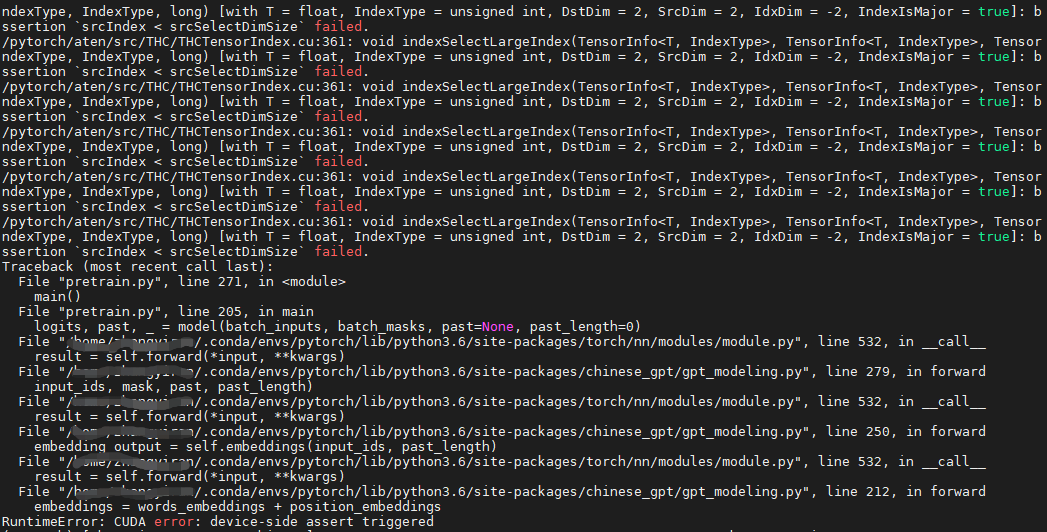

Thanks to Huggingface for making these types of model extensions possible. A big thanks as well to Konstantin Buschmeier and Nikhil Nagaraj for their great effort in the Dutch and German GPT2 finetuning tracks, and a huge kudos to Bert Vanhaeke for creating the stunning GPT2 web app; our eyes are still sparkling.

Alex Berry, Jason Chan, Hyunjoon Lee, Sayan Samanta, Christina Ye

In Blog Post 1, we talked about the Conditional Transformer Language Model (CTRL) and the Plug and Play Language Model (PPLM), two models capable of generating text conditioned on a sentiment and/or keyword. Before getting to those, however, we decided to finetune a GPT-2 language model to set up a baseline against which to compare CTRL and PPLM as our final model(s).

GPT-2, the successor to GPT, is a transformer-based language model developed by OpenAI. While it was designed to predict the next word based on the previous words in a text, it has been shown to be capable of producing convincing synthetic text that garnered a "credibility score" of 6.91/10 (actually 6.07 in the model we used, but more on that in a bit) as evaluated by a survey at Cornell University. The original model (with 1.5 billion parameters) was trained on a dataset of roughly 8 million text documents from the internet, curated to reflect the naturally occurring diversity of text across multiple domains.

Initially, there was a concern that the original model could be misused to generate synthetic propaganda. By the time it was released, the concern had died down for lack of evidence of such exploitation. Rather than the full 1.5-billion-parameter model, however, we use a smaller model with 124 million parameters, due to constraints (primarily computational, but not limited to that).

It is unwieldy to discuss the general transformer architecture here (there is a great lecture here), and you cannot modify the GPT-2 architecture anyway, so for the purpose of this post we treat it as a black box. Since the original GPT-2 did not come with any protocol for finetuning, Neil Shepperd and then Max Woolf forked the existing model to create a finetune-and-generate pipeline, which we have used in this project.

Let us address two limitations of the original GPT-2 model:

1. It was originally designed to generate long-form text until it reaches the prescribed length. Therefore, it is not suitable for generating shorter text (like a quick review).
2. With the exception of the starting cue, the generation is uncontrolled, meaning there is no way to enforce the presence of a keyword or context in the generated text.

The first drawback is addressed by introducing a start token and an end token at the beginning and end of each instance in the dataset, and then instructing the model to terminate generation once the end token has been generated.
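The start/end-token fix described above comes down to a preprocessing step before finetuning and a post-processing step after generation. Below is a minimal Python sketch of both, with the model itself left out. It assumes the delimiter strings `<|startoftext|>` and `<|endoftext|>`: the post does not name its tokens, and these are simply the ones conventionally used with Max Woolf's gpt-2-simple pipeline. The helper names `build_training_text` and `extract_first_sample` are hypothetical.

```python
from typing import Iterable

# Assumed delimiter strings: the post does not name its tokens, so these follow
# the gpt-2-simple convention and should be treated as an assumption.
START_TOKEN = "<|startoftext|>"
END_TOKEN = "<|endoftext|>"


def build_training_text(reviews: Iterable[str]) -> str:
    """Wrap each review in start/end tokens, one wrapped review per line."""
    return "\n".join(f"{START_TOKEN}{r.strip()}{END_TOKEN}" for r in reviews)


def extract_first_sample(generated: str) -> str:
    """Keep only the text between the first start token and the next end token.

    A fixed-length generation can run past the end of a review (into a new
    sample or a cut-off fragment); everything from the first end token on is
    dropped. If no start token is present, the text is read from the beginning.
    """
    start = generated.find(START_TOKEN)
    body = generated[start + len(START_TOKEN):] if start != -1 else generated
    end = body.find(END_TOKEN)
    return (body if end == -1 else body[:end]).strip()


if __name__ == "__main__":
    reviews = ["Great coffee, friendly staff.", "Too noisy to work in."]
    print(build_training_text(reviews))

    # A made-up raw generation that overran the first review.
    raw = (
        "<|startoftext|>Lovely patio and quick service.<|endoftext|>\n"
        "<|startoftext|>The soup was"
    )
    print(extract_first_sample(raw))  # Lovely patio and quick service.
```

This sketch truncates after the fact; a sampling loop could instead stop as soon as the end token is produced, which saves compute but yields the same review text.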

# Finetune GPT-2 code

At the time of writing, we have created models in two languages. Here comes the real beauty: since the OSCAR corpus is available in many languages, this technique is perfectly extensible to other vocabularies as well!

There is also room to scale up:

- in model size: training larger GPT2 base models;
- in model data: giving it more data per language;
- in model language: training for other languages as well.

👆 Note: while we haven't assessed this ourselves, we have the feeling that this works well because Dutch is relatively close to English in terms of language characteristics (sentence structure, conjugation, syntax, etc.). We suspect this experiment would work much less well for languages such as Hindi, Mandarin or Japanese.

We had the luck of being able to stand on the shoulders of giants with this one, so we want to extend our thanks to:

- the brilliant tutorial in the fastai docs;
- this great blog post from Pierre Guillou, from which we were able to reuse a great deal of code.
